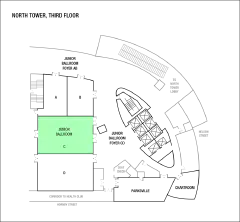

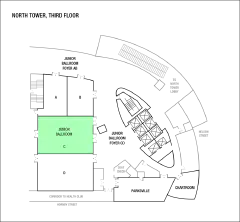

Junior Ballroom C

North Tower, 3rd floor

Given a group $G$ and $S \subset G$, the Cayley (di)graph Cay$(G,S)$ is the graph whose vertex set is $G$ with an arc from $g$ to $h$ if and only if $hg^{-1}\in S$. Although the graph isomorphism problem in general is only known to be quasipolynomial, for some families of Cayley (di)graphs it reduces to understanding the automorphisms of the groups that are involved. The question of when this happens is known as the Cayley Isomorphism (CI) problem.

I will provide an overview of the current state of the CI problem for both finite and infinite groups.

The celebrated Erdos-Ko-Rado theorem can be interpreted as a characterization of the size and structure of independent sets/co-cliques of maximum size in Kneser Graphs. Similar characterizations have been observed in a few other classes of Distance Regular Graphs. In this talk, we will consider a closely related problem of finding the size and structure of the biggest induced forest in a graph. This question is inspired by a graph process known as `Bootstrap Percolation’. In this process, given a fixed threshold $r$ and a set of ‘initially infected’ vertices, the states of vertices (either healthy or infected) are updated indiscrete time steps: any healthy vertex with at least $r$ infected neighbours becomes itself infected. An initially infected set of vertices percolates if the infection spreads to the entire graph. One of the extremal questions related to this process is to find the smallest percolating set. In the special case of $d$-regular graphs, when $r=d$, a set percolates exactly when its complement is independent. Meanwhile, in the case $r=d−1$, a set percolates if its complement is a set of vertices that induce a forest. We will characterize maximum induced forests in the Kneser graph and some other families of Distance Regular Graphs.

A linear space is a point-line incidence structure such that each pair of points is incident to exactly one line. It is regular if all lines are incident to the same number of points. (This is equivalent to a balanced block design with $\lambda=1$.)

I will discuss linear spaces which admit a very special class of groups of automorphisms, called extremely primitive groups. Together with Melissa Lee, we have almost finished the classification of these linear spaces. In the process, we discovered some new interesting constructions which I will describe.

A set of permutations $\mathcal{F} \subset Sym(V)$ is said to be intersecting if any two of its elements agree on some element of $V$. The intersection density, $\rho(G)$, of a finite transitive permutation group $G \leq Syn(V)$, is the maximum ratio $\frac{|\mathcal{F}| |V|}{|G|}$ where $\mathcal{F}$ runs through all intersecting sets of $G$. If the intersection density of a group is equal to 1, we say that a group has the Erdos-Ko-Rado property. The intersection density for many groups has been considered, mostly considering which groups have the Erdos-Ko-Rado property. In this talk I will consider the intersection density of a vertex-transitive graph which is defined to be the maximum value of $\rho(G)$ where is a transitive subgroup of the automorphism group of $X$. I will focus on the intersection density of the Kneser graph $K(n,3)$, for $n\geq 7$.

In 1975, Szemeredi proved that for every real number $\delta > 0 $ and every positive integer $k$, there exists a positive integer $N$ such that every subset $A$ of the set ${1, 2, \cdots, N }$ with $|A| \geq \delta N$ contains an arithmetic progression of length $k$. There has been a plethora of research related to Szemeredi’s theorem in many areas of

mathematics. In 1990, Cameron and Erdos proposed a conjecture about counting the number of subsets of the set ${1,2, \dots, N}$ which do not contain an arithmetic progression of length $k$. In the talk, we study a natural higher dimensional version of this conjecture by counting

the number of subsets of the $k$-dimensional grid ${1,2, \dots, N}^k$ which do not contain

a $k$-dimensional corner that is a

set of points of the

form ${ a } \cup { a+ de_i : 1 \leq i \leq k }$ for some $a \in {1,2, \dots, N}^k$ and $d > 0$, where $e_1,e_2, \cdots, e_k$ is the standard basis of $\mathbb{R}^k$.

Our main tools for proof are the hypergraph container method and the supersaturation result

for $k$-dimensional corners in sets of size $\Theta(c_k(N))$, where $c_k(N)$ is the maximum size of a $k$-dimensional corner-free subset of ${1,2, \dots, N}^k$.

We would like to know for which function f(k) it is true that any oriented graph of minimum semidegree at least f(k) necessarily contains a given oriented path with k edges. For the directed path, f(k)=k/2 works, and perhaps this is true for other orientations as well. We show that this is approximately the case for large antidirected paths, and more generally, for large antidirected trees of bounded maximum degree. This is joint work with Camila Zárate.

It is a classical problem to determine the minimum and maximum genus of a $2$-cell embedding of a graph $G$. However there will be many other embeddings with a genus between these two values: we will study the average genus across all of these embeddings.

We give an overview of recent work on the average genus for fixed graphs. We also discuss extensions of the problem to random graphs and random maps, and links with representation theory.

The symmetry of a discrete object (such as a graph, map or polytope, and even a Riemann surface or 3-manifold) can be measured by its automorphism group – the group of all structure-preserving bijections from the object to itself. (For example, an automorphism of a map takes vertices to vertices, edges to edges, faces to faces, and preserves incidence among these elements.) But also/conversely, objects with specified symmetry can often be constructed from groups.

In this talk I will give some examples showing how this is possible, and some methods which help, and describe some significant outcomes of this approach across a range of topics. Included will be some very recent developments, including a few unexpected discoveries.

The game of Cops and Robber is traditionally played on a finite graph. But one can define the game that is played on an arbitrary geodesic space (a compact, path-connected space endowed with intrinsic metric). It is shown that the game played on metric graphs is essentially the same as the discrete game played on abstract graphs and that for every compact geodesic surface there is an integer $c$ such that $c$ cops can win the game against one robber, and $c$ only depends on the genus $g$ of the surface. It is shown that $c=3$ for orientable surfaces of genus $0$ or $1$ and nonorientable surfaces of crosscap number $1$ or $2$ (with any number of boundary components) and that $c=O(g)$ and that $c=\Omega(\sqrt{g})$ when the genus $g$ is larger. The main motivation for discussing this game is to view the cop number (the minimum number of cops needed to catch the robber) as a new geometric invariant describing how complex is the geodesic space.

For $r\geq 1$, a graph has $r$-friendship property if every pair of vertices has exactly $r$ common neighbours. The motivation for this definition is from the Friendship theorem, which is on the graphs with $1$-friendship property. The Friendship theorem, first proved by Erdős, Rényi, and Sós in 1966, states that if $G$ is a graph in which every pair of vertices has exactly one common neighbour, then $G$ has a universal vertex $v$ adjacent to all others, and the graph induced by $V(G)\setminus {v }$ is a matching. In this presentation, we study graphs with $r$-friendship property, where $r\geq 2$. We show all such graphs are strongly regular. Furthermore, we prove that for any $r\geq 2$, there are only finitely many graphs with $r$-friendship property. This is an ongoing joint work with Karen Gunderson.

Graphs having three distinct eigenvalues are a fundamental object of study in spectral graph theory. Strongly regular graphs are the most well-studied examples. In 1995, at the 15th British Combinatorial Conference, Willem Haemers asked do there exist any connected graphs having three distinct eigenvalues apart from strongly regular graphs and complete bipartite graphs. Haemer’s question prompted responses from Muzychuk-Klin and Van Dam who found new families of nonregular graphs having three distinct eigenvalues.

Muzychuk and Klin initiated the study of a graph with three distinct eigenvalues via its Weisfeiler-Leman closure. They classified such graphs whose Weisfeiler-Leman closure has rank at most 7. In this talk, we extend this classification up to rank 9. Our results include a correction of the literature (where the rank 8 case was erroneously claimed to be impossible) and discussion of further study.

When considering proper colourings of oriented graphs, one possible approach is to insist that the colouring be consistent with the orientation: all edges between two colour classes should be oriented in the same way. The oriented chromatic number of an oriented graph is the least $k$ so that there is a homomorphism from the oriented graph to some tournament of order $k$. In this talk, I will present new work with Nir on the oriented chromatic number of random models of oriented graphs of bounded degree. Previous extremal results can be used to bound the oriented chromatic number of a random $d$-regular oriented graph between $\Omega(\sqrt{2}^d)$ and $O(d^2 2^d)$. Using colourings by doubly-regular tournaments, we improve the upper bound to $O(\sqrt{e}^d)$. I will present these results and discuss an optimization result for functions over doubly stochastic matrices that extends the optimization result of Achlioptas and Naor that was central to results on chromatic numbers of unoriented random graphs.

One of the central objects of interest in additive combinatorics is the sumset $A+B= {a+b:a \in A, b \in B}$ of two sets $A,B$ of integers. Our main theorem, which improves results of Green and Morris, and of Mazur, implies that the following holds for every fixed $\lambda > 2$ and every $k>(\log n)^4$: if $\omega$ goes to infinity as $n$ goes to infinity (arbitrarily slowly), then almost all sets $A \subseteq [n]$ with $|A| = k$ and $ |A + A| < \lambda k$ are contained in an arithmetic progression of length $\lambda k/2 + \omega$. This is joint work with Marcelo Campos, Mauricio Collares, Rob Morris and Victor Souza.

In classical homotopy theory, two spaces are considered homotopy equivalent if one space can be continuously deformed into the other. This theory, however, does not respect the discrete nature of graphs. For this reason, a discrete homotopy theory that recognizes the difference between the vertices and edges of a graph was invented. This theory is called A-homotopy theory in honor of Ron Aktin, who created the foundations of this theory in Q-analysis in the 1970s. A-homotopy theory allows us to compare graphs and to compute invariants for graphs. The intended use of these invariants is to find areas of low connectivity in a large network where information might be missing or where the network might be made more efficient.

In algebraic topology, each space $X$ has associated spaces $\widetilde{X}$ and continous maps $p: \widetilde{X} \to X$, and each pair together $(\widetilde{X}, p)$ is called a covering space of $X$. Under certain conditions, a space has a covering space with special properties, called a universal cover. Among other things, universal covers allow us to factor maps between topological spaces, which can be quite useful. In this talk, I will give a brief introduction to A-homotopy theory and discuss the universal covers I developed for graphs with no 3 or 4-cycles as well as the covering graphs obtained from quotienting these universal covers. I will end by mentioning some of the useful properties of these universal covers.

Among infinitely many factorizations A=VV* of a psd matrix A, we seek the factor V that has the smallest (1,2) norm. In this talk we review the origin of this problem as well as existing results regarding the optimal value. We discuss also the conjecture that the squared (1,2) norm of V is equivalent to the (1,1) norm of A.

Over the last few decades, the (unconstrained) LASSO $$ \min_{x\in \mathbb{R}^n} \frac12 \lVert A x-b\rVert_2^2 + \lambda \lVert x\rVert_1, $$ has become an indispensable tool in statistical learning, data science, and signal processing, thanks to its ability to efficiently recover sparse approximate solutions to (underdetermined) linear systems.

In this talk, we will present a novel variational analysis of this popular optimization program. First, we will establish smoothness results as well as Lipschitz properties for the optimal value and optimal solution maps of the LASSO as functions of the measurement vector $b$, the sampling matrix $A$, and, most notably, the tuning parameter $\lambda$. Then, we will illustrate how to apply the proposed variational analysis in the context of compressed sensing, validating our theoretical findings with numerical experiments. Finally, we will discuss extensions to related optimization problems such as the the square-root LASSO.

Previous work has shown that a neural network with the rectified linear unit (ReLU) activation function leads to a convex polyhedral decomposition of the input space. In this talk, we will see how one can utilize this structure to detect and analyze adversarial attacks in the context of digital images.

When an image passes through a network containing ReLU nodes, the firing or non-firing at a node can be encoded as a bit ($1$ for ReLU activation, $0$ for ReLU non-activation). The sequence of all bit activations identifies the image with a bit vector, which identifies it with a polyhedron in the decomposition. We identify ReLU bits that are discriminators between non-adversarial and adversarial images and examine how well collections of these discriminators can ensemble vote to build an adversarial image detector and also present further applications of this induced geometry.

The remarkable successes of neural networks in a huge variety of inverse problems have fueled their adoption in disciplines ranging from medical imaging to seismic analysis over the past decade. However, the high dimensionality of such inverse problems has simultaneously left current theory, which predicts that networks should scale exponentially in the dimension of the problem, unable to explain why the seemingly small networks used in these settings work as well as they do in practice. To reduce this gap between theory and practice, we provide a general method for bounding the complexity required for a neural network to approximate a Lipschitz function on a high-dimensional set with a low-complexity structure. The approach is based on the fact that many sets of interest in high dimensions have low-distortion linear embeddings into lower dimensional spaces. We can exploit this fact to show that the size of a neural network needed to approximate a Lipschitz function on a low-complexity set in a high dimensional space grows exponentially with the dimension of its low-distortion embedding, not the dimension of the space it lies in.

The goal of Optimal Recovery is to uncover procedures that are worst-case optimal for the task of learning / recovering unknown functions from a priori information (the model) and a posteriori information (the observations). Optimal recovery procedures can sometimes be chosen as linear maps, at least when the observations are accurate. When they are corrupted, however, the problem becomes more complicated, especially if the focus is put on the practical construction of the (near) optimal procedure. This talk showcases positive results in three situations: deterministic observation errors bounded in $\ell_2$; deterministic observation errors bounded in $\ell_1$; and stochastic log-concave observation errors.

When the data is large, or comes in a streaming way, randomized iterative methods provide an efficient way to solve a variety of problems, including solving linear systems, finding least square solutions, optimizing with gradient descent and Newton methods, solving feasibility problems and beyond. However, if the data is corrupted with arbitrarily large sparse errors, one can expect the iterates are diverted far from the solution with each corrupted equation they encounter. I am going to talk about our recently proposed robust versions of Randomized Kaczmarz and Stochastic Gradient Descent methods for solving linear systems that avoid harmful corrupted equations by exploring the space as they proceed with the iterates. We show that the convergence rates in expectation are comparable with the convergence of the vanilla methods on uncorrupted systems. I will also touch on how to use the information obtained on the exploration phase efficiently, and what structural characteristics of the data matrix are crucial for such methods. Based on the joint work with Deanna Needell, Jamie Haddock, Will Swartworth, Ben Jarman, and Lu Cheng.

Quantization is one of the compression techniques to reduce computation cost, memory, and power consumption of deep neural networks (DNNs). In this talk, we will focus on a post-training quantization algorithm, GPFQ, that is based on a deterministic greedy path-following mechanism, and its stochastic variant SGPFQ. In both cases, we rigorously analyze the associated error bounds for quantization and show that for quantizing a single-layer network, the relative square error essentially decays linearly in the number of weights – i.e., level of over-parametrization. To empirically evaluate the method, we quantize several common DNN architectures with few bits per weight, and test them on ImageNet, showing only minor loss of accuracy compared to unquantized models.

Shannon’s coding theorem establishes conditions under which a given stochastic source can be encoded by a given stochastic channel with zero probability of error. One can also consider a deterministic channel, given by a function from one space of sequences to another, and ask which sources can be transmitted by that channel not just with zero error probability but in fact injectively. Working with an existing formalization of this idea in symbolic dynamics, I will present a characterization of the subshifts that can be transmitted injectively by a given sliding block code on a mixing shift of finite type (i.e. the space of sequences realizable by an ergodic Markov chain). The result generalizes a classical embedding theorem of Krieger and answers a question posed to me by Tom Meyerovitch.

Sparse polynomial methods have, over the last decade, become widely used tools for approximating smooth, high-dimensional functions from limited data. Notable applications include parametric modelling and computational uncertainty quantification, discovering governing equations of dynamical systems, and high-dimensional PDEs. A standard sparse polynomial approximation scheme involves solving a convex optimization problem involving a $\ell^1$- or weighted $\ell^1$-norm. This is typically done using first-order methods. However, such methods generally converge very slowly, making them undesirable for the many applications that require high accuracy. Motivated by this problem, in this talk I will describe a general scheme for accelerating first-order optimization algorithms. This scheme applies to any convex optimization problem possessing a certain $\textit{approximate sharpness}$ condition and essentially any first-order optimization algorithm. For a wide range of problems, i.e., not just $\ell^1$-minimization-type problems, this scheme achieves either optimal rates of convergence or improves on previously established rates. To emphasize its usefulness and generality, I will showcase applications in compressive imaging and machine learning.

A common approach for compressing large-scale data is through matrix sketching. In this talk, we consider the problem of recovering low-rank matrices or tensors from noisy sketches. We provide theoretical guarantees characterizing the error between the output of the sketching algorithm and the ground truth low-rank matrix or tensor. Applications of this approach to synthetic data and medical imaging data will be presented.

Recently several streaming algorithms for computing low-rank Tucker approximations of large tensors have been introduced by several researchers including Tropp, Udell, et al., De Lathauwer et al., and others. In this talk we will review the basic approach used by these works highlighting their similarities, and then present generalized theoretical guarantees proving their accuracy on full-rank and noisy data with high probability from a wide range of measurements. Some of our newly proposed structured measurements have the advantage of reducing the overall memory needed to sketch and recover large, potentially streamed, tensors and can also be chosen to economize sketching and recovery time. In particular, our recovery algorithms (after measurements have been collected) are sublinear-time in the overall tensor size. In addition, we improve upon prior works by providing stronger recovery error bounds which apply to a much more general class of measurements than previously analyzed. Our numerical experiments further highlight the value of these new measurement choices in various problem scenarios.

We will discuss variants of matrix and tensor CUR decompositions and algorithms for Robust PCA and matrix completion that allow one to observe only submatrices or subtensors of the data. By subsampling modes, we can obtain algorithms with state-of-the-art runtime for these tasks. Sample applications to image and video processing will be discussed.

We study compressed sensing when the signal structure is the range of a (pre-trained) generative neural network (GNN) and give the first signal recovery guarantees when the measurements are subsampled from an orthonormal basis. This includes the subsampled Fourier transform as a special case, thus allowing a measurement model in line with applications such as MRI. We define a coherence parameter which depends upon the alignment of the orthonormal basis and the range of the GNN and show that small coherence implies robust signal recovery from relatively few measurements. Numerical experiments verify that regularizing to keep the coherence parameter small while training the GNN can improve performance in the signal recovery stage.

We will introduce a mathematical signal transform based on Optimal Transport Theory. It builds upon the existing Cumulative Distribution Transform by Park, Kolouri, Kundu, and Rohde (2018). We will present both forward (analysis) and inverse (synthesis) formulas for this nonlinear transform, and describe several of its properties including translation, scaling, convexity, and linear separability. Indeed, since this tool is a new signal representation based on Optimal Transport theory, it has suitable properties for decoding information related to certain signal displacements. We will describe a Wasserstein-type metric in transform space, and show applications in classifying (detecting) signals under random displacements, and parameter estimation problems for certain types of generative models.

Geometric Deep Learning is an emerging field of research that aims to extend the success of convolutional neural networks (CNNs) to data with non-Euclidean geometric structure such as graphs and manifolds. Despite being in its relative infancy, this field has already found great success and is utilized by e.g., Google Maps and Amazon’s recommender systems.

In order to improve our understanding of the networks used in this new field, several works have proposed novel versions of the scattering transform, a wavelet-based model of neural networks for graphs, manifolds, and more general measure spaces. In a similar spirit to the original Euclidean scattering transform, these geometric scattering transforms provide a mathematically rigorous framework for understanding the stability and invariance of the networks used in geometric deep learning. Additionally, they also have many interesting applications such as discovering new drug-like molecules, solving combinatorial optimization problems, and using single-cell data to predict whether or not a cancer patient will respond to treatment.

We consider the nonlinear inverse problem of learning a transition operator ${A}$ from partial observations across different time scales, or in other words, from {sparse observations of its powers ${A},\cdots,{A}^{T-1}$. This Spatio-Temporal Transition Operator Recovery problem is motivated by the recent interest in learning time-varying graph signals that are driven by graph operators depending on the underlying graph topology. We address the non-linearity issue by embedding the problem into a higher-dimensional space of suitable block-Hankel matrices, where it becomes a low-rank matrix completion problem, even if ${A}$ is of full rank. For both a uniform and an adaptive random space-time sampling model, we quantify the recoverability of the transition operator via suitable measures of incoherence of these block-Hankel embedding matrices. For graph transition operators these measures of incoherence depend on the interplay between the dynamics and the graph topology. We establish quadratic local convergence analysis of a suitable non-convex iterative reweighted least squares (IRLS) algorithm, and show that in optimal scenarios, no more than $\mathcal{O}(rn \log(nT))$ space-time samples are sufficient to ensure accurate recovery of a rank-$r$ operator ${A}$ of size $n \times n$. This establishes that {spatial samples} can be substituted by a comparable number of {space-time samples}. We provide an efficient implementation of the proposed IRLS algorithm with space complexity of order $O(r n T)$ and whose per-iteration time complexity is {linear} in $n$. Numerical experiments for transition operators based on several graph models confirm that the theoretical findings accurately track empirical phase transitions, and illustrate the applicability of the proposed algorithm.